Computers, Monitors & Technology Solutions

NEW XPS LAPTOPS

Iconic Design. Now with AI.

Sleek laptops with Intel® Core™ Ultra processors and built-in AI – ready for every project.

ADVANCING SUSTAINABILITY

Repurpose. Reuse. Rethink.

Drive change today by exploring our devices designed for sustainability.

WELCOME TO NOW

Learning to Grow with AI

With our smart infrastructure, businesses are cultivating new farming strategies.

Dell Technologies Showcase

Featured Products and Solutions

INSPIRON 14 2-IN-1 LAPTOP

Designed for Versatility

Explore with Inspiron 14 2-in-1, featuring built-in AI.

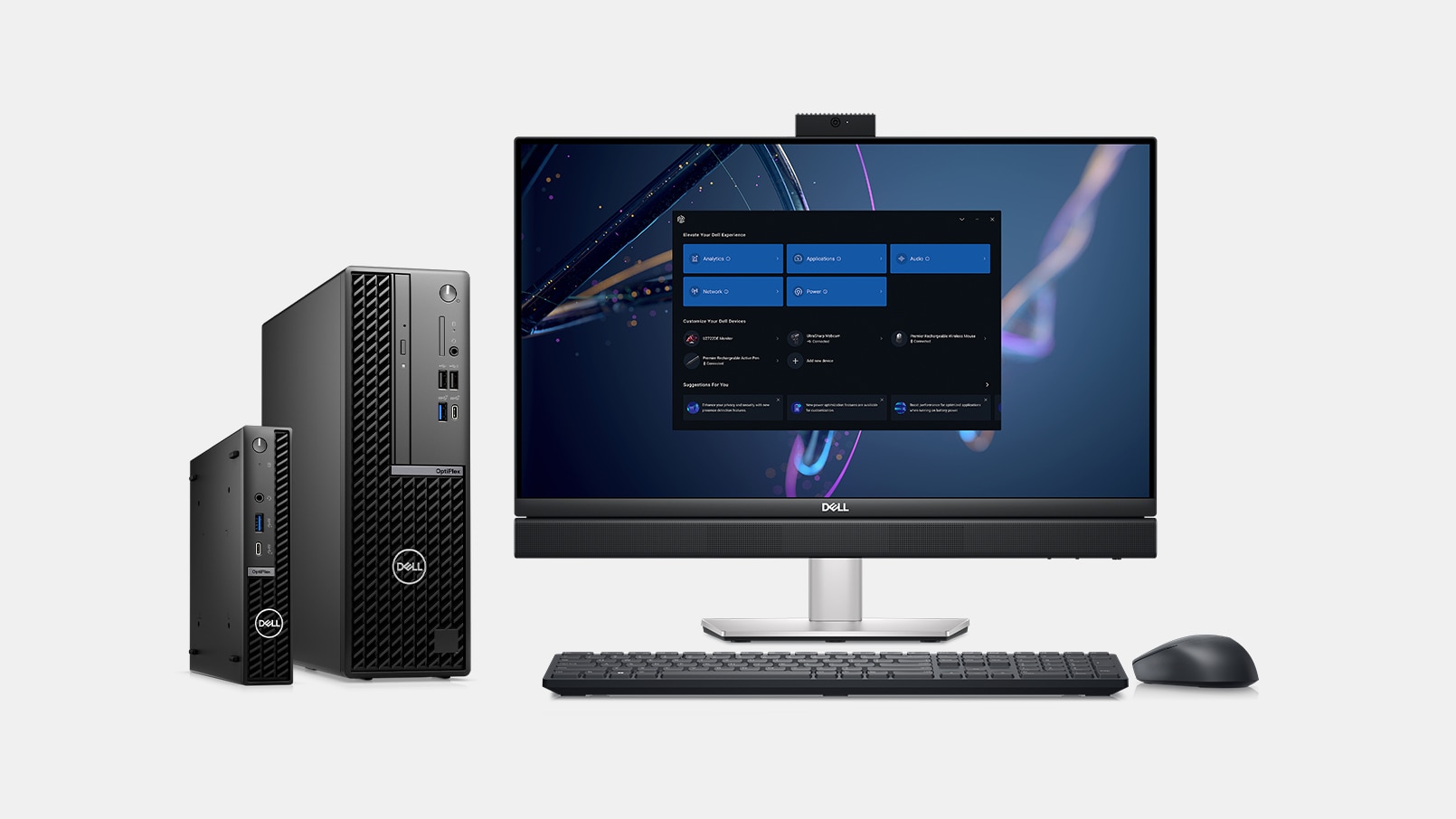

OPTIPLEX DESKTOP FAMILY

Intelligence Meets Simplicity

Engineered for reliable user experiences and simplified management.

ALIENWARE m18 R2

Stun Rivals. Stay in Awe.

Overpower the opposition with up to 270W of Total Power Performance on our most powerful 18-inch gaming laptop.

SERVERS, STORAGE, NETWORKING

Flexible, Scalable IT Solutions

Power transformation with server, storage and network solutions that adapt and scale to your business needs.

NEW DELL PREMIER WIRELESS ANC HEADSET

Turn Up the Quiet

World’s most intelligent wireless headset in its class* with dual directional AI-based noise cancelling.

Dell Support

We're Here to Help

From offering expert advice to solving complex problems, we've got you covered.

My Account

Create a Dell account and enroll in Dell Rewards to unlock an array of special perks.

Easy Ordering

Order Tracking

Dell Rewards

Dell Premier

Leverage hands-on IT purchasing for your business with personalized product selection and easy ordering via our customizable online platform.

Simplify Purchasing

Discover Insights

Shop Securely

DELL REWARDS

Shop More. Earn More.